What's the Best Computing Infrastructure for AI? | Data Center Knowledge

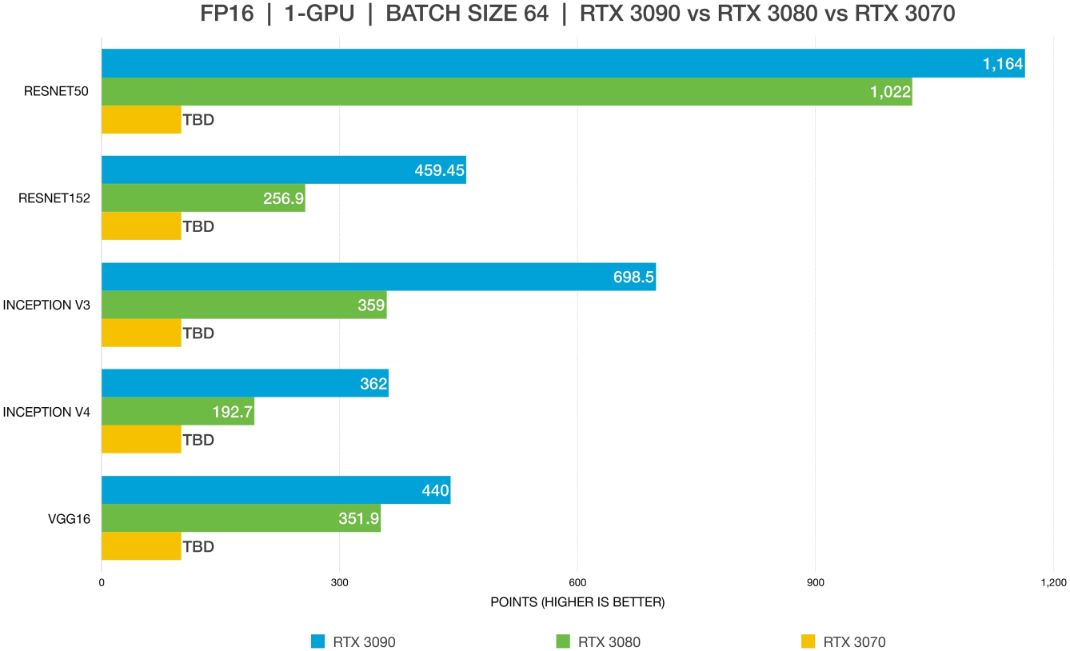

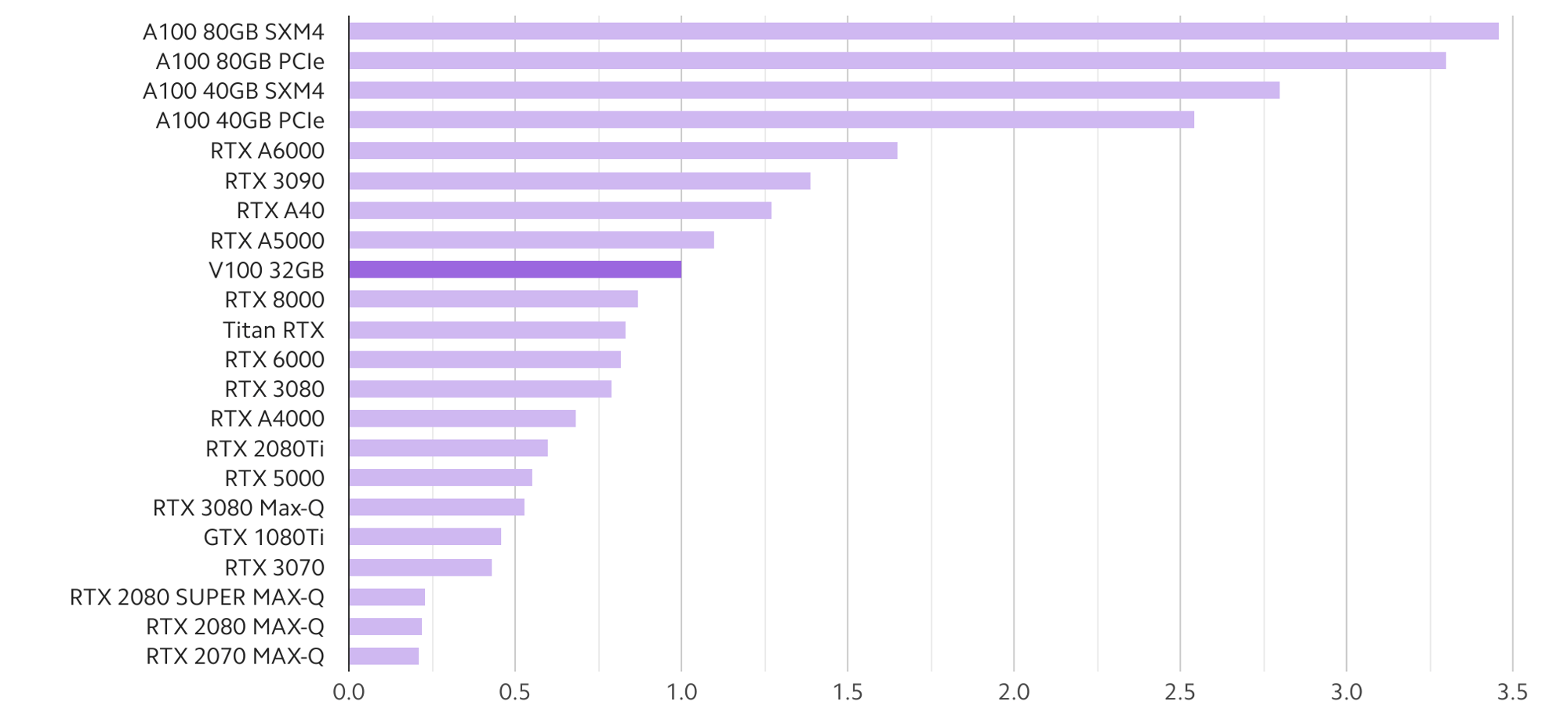

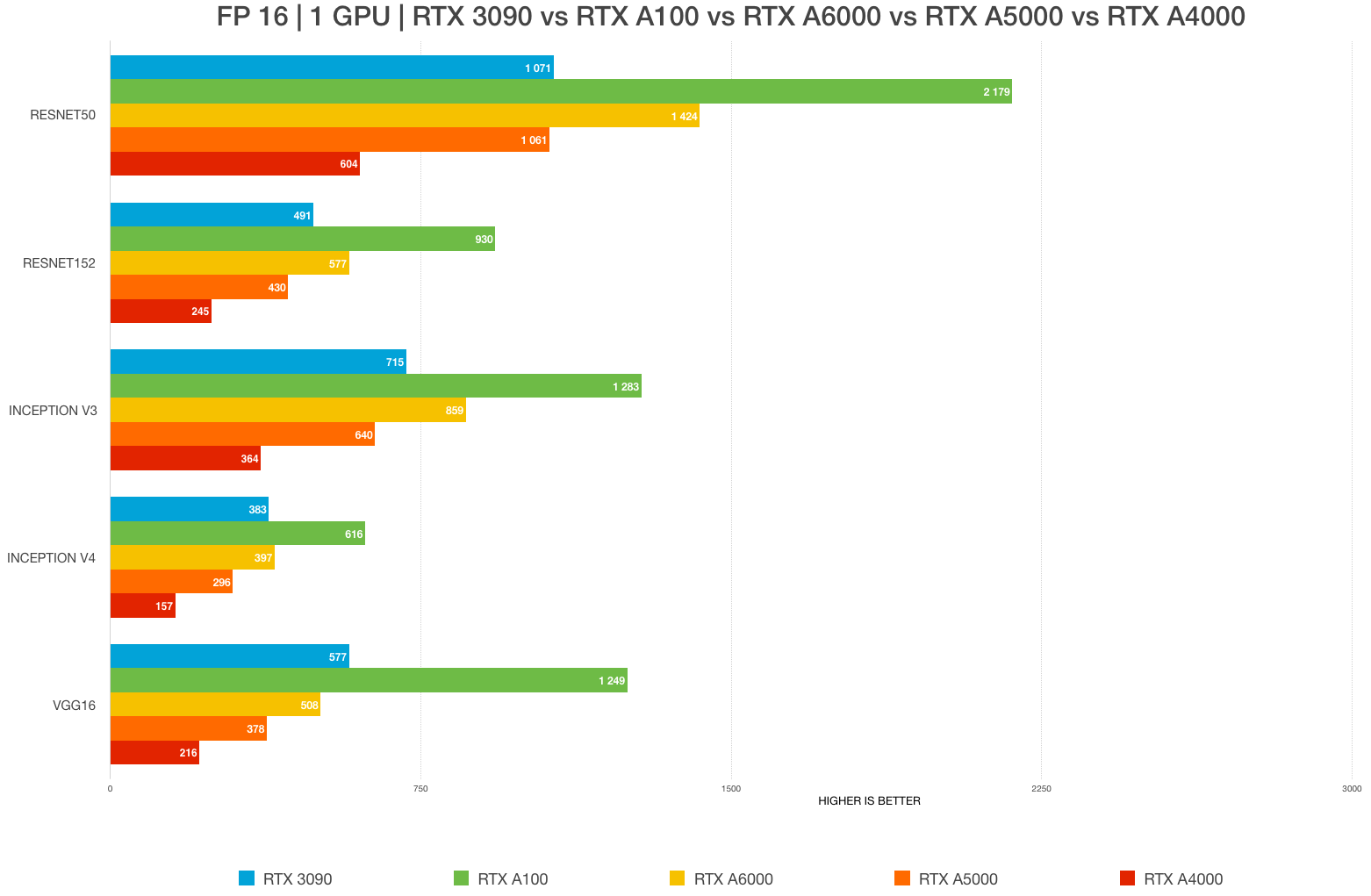

Best GPU for AI/ML, deep learning, data science in 2023: RTX 4090 vs. 3090 vs. RTX 3080 Ti vs. A6000 vs. A5000 vs. A100 benchmarks (FP32, FP16) (Updated) | BIZON